How Does AR Really Work?

You’ve seen Pokémon GO, Harry Potter: Wizards Unite, and Agents of Discovery. You’ve heard of Google Glass and Microsoft’s HoloLens, and you’ve watched tons of movies like Avatar, Spider-Man, and Detective Pikachu, where 3D animation seems to become part of the real world. But how does all this really work? How do we create augmented reality?

Augmented reality, or ‘AR,’ is what we get when we overlay digital video or imagery onto the real world. It sounds complicated, but computers have become quite adept at it. First, they observe a real-world environment and create a digital representation of it. Then they place digital objects into the digital environment they’ve created. Finally, they superimpose that digital layer onto real footage, and this is what we see on the screen.
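The final step, superimposing the digital layer onto real footage, is commonly done with a technique called alpha compositing: each pixel is a weighted blend of the rendered virtual pixel and the camera pixel. Here is a minimal sketch, assuming tiny grayscale frames stored as nested lists with brightness values from 0.0 to 1.0; real apps do this on the graphics chip with full-color images, so treat it as an illustration rather than how any particular app works.

```python
# Sketch of the "superimpose" step using alpha compositing.
# Assumption: frames are tiny grayscale images stored as lists of rows,
# with brightness values from 0.0 (black) to 1.0 (white).

def composite(camera, virtual, alpha):
    """Blend a rendered virtual layer over a camera frame, pixel by pixel.

    alpha[y][x] is 1.0 where a virtual object fully covers the pixel,
    0.0 where the real camera image should show through, and anything
    in between for partly transparent edges.
    """
    out = []
    for cam_row, virt_row, a_row in zip(camera, virtual, alpha):
        out.append([a * v + (1.0 - a) * c
                    for c, v, a in zip(cam_row, virt_row, a_row)])
    return out

camera = [[0.2, 0.2], [0.2, 0.2]]   # a dim real-world scene
virtual = [[1.0, 1.0], [1.0, 1.0]]  # a bright virtual object
alpha = [[0.0, 1.0], [0.0, 0.5]]    # object covers the right column

result = composite(camera, virtual, alpha)
print(result)
```

Pixels with an alpha of 0.0 keep the camera’s value, pixels with 1.0 show only the virtual object, and the 0.5 pixel lands halfway between the two.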

Sensors

Let’s consider AR on your smartphone. It all starts with your phone’s sensors: the camera, gyroscope, accelerometer, light sensor, proximity sensor, and maybe more, depending on your phone’s model. Your phone gathers data from these sensors and interprets it as information about its surroundings (much like how our brains work with our five senses). When your phone is missing some of these hardware elements (or has outdated versions of them), you may run into compatibility issues.
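To give a feel for how readings from two sensors can be combined, here is a sketch of a complementary filter, one simple and widely taught way to estimate a phone’s tilt. A gyroscope gives smooth readings but slowly drifts; an accelerometer senses the direction of gravity without drifting but is noisy. The filter trusts mostly the gyroscope and gently corrects it with the accelerometer. This is an assumption-laden illustration (one tilt axis, made-up sensor values, a hypothetical blend factor `k=0.98`), not the method any specific phone uses.

```python
import math

def tilt_from_accelerometer(ay, az):
    """Estimate tilt (radians) from the direction of gravity in the y-z plane."""
    return math.atan2(ay, az)

def complementary_filter(angle, gyro_rate, ay, az, dt, k=0.98):
    """Fuse one gyroscope reading with one accelerometer reading.

    The integrated gyroscope estimate is weighted by k; the accelerometer
    estimate, weighted by (1 - k), slowly pulls the result back toward
    gravity and cancels the gyroscope's drift.
    """
    gyro_estimate = angle + gyro_rate * dt
    accel_estimate = tilt_from_accelerometer(ay, az)
    return k * gyro_estimate + (1.0 - k) * accel_estimate

# Hypothetical scenario: phone held still at a tilt of 0.3 rad, but the
# gyroscope has a small bias and reports 0.01 rad/s of fake rotation.
true_tilt = 0.3
angle = 0.0
for _ in range(500):  # 500 readings at 100 Hz = 5 seconds
    angle = complementary_filter(angle, gyro_rate=0.01,
                                 ay=math.sin(true_tilt),
                                 az=math.cos(true_tilt),
                                 dt=0.01)
print(round(angle, 3))
```

The estimate settles close to the true tilt of 0.3 rad, whereas integrating the biased gyroscope alone would keep drifting further away the longer the app ran.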

But that’s a bit vague. How exactly is the data interpreted? Your phone analyzes each camera image and uses algorithms to identify ‘feature points’: distinctive spots, such as corners and high-contrast details, that it can recognize from one frame to the next and use to find planes and boundaries. A depth-from-motion algorithm estimates how far away objects are by comparing how those points shift as the camera moves, and the feature points, together with data from the motion sensors, are used to determine the position and orientation of the camera. These components (the position and orientation of the camera and the locations of surfaces) are combined to form a 3D digital model of the environment. Your phone can also estimate the room’s ambient light using the camera or a separate light sensor.
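The core idea behind depth from motion is parallax: when the camera moves, nearby points shift across the image more than distant ones. In the simplest case (the camera slides sideways by a distance B without rotating), the depth of a point is Z = f · B / d, where f is the camera’s focal length in pixels and d is how many pixels the point shifted. The sketch below assumes that simplified case and uses hypothetical numbers; real depth-from-motion algorithms handle rotation, noise, and many points at once.

```python
def depth_from_motion(focal_px, baseline_m, x_before_px, x_after_px):
    """Estimate the distance to one feature point seen from two camera positions.

    Assumes the camera moved sideways by baseline_m without rotating, so the
    point's horizontal shift in the image (the disparity) is inversely
    proportional to its depth: Z = f * B / d.
    """
    disparity = abs(x_before_px - x_after_px)
    if disparity == 0:
        raise ValueError("no parallax: point is very far away or camera didn't move")
    return focal_px * baseline_m / disparity

# Hypothetical numbers: 1500 px focal length, phone moved 10 cm sideways.
near = depth_from_motion(1500, 0.10, 800, 650)  # point shifted 150 px
far = depth_from_motion(1500, 0.10, 800, 770)   # point shifted 30 px
print(near, far)  # 1.0 5.0
```

The point that barely moved between frames turns out to be five times farther away than the one that shifted a lot.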

Photo by XR Expo on Unsplash

Above: A virtual reality (VR) headset identifies and tracks a man’s hands. Unlike AR, which integrates computer graphics into the real world, VR immerses players in a completely virtual environment with the use of a headset. Hand tracking allows VR games to be played without physical controllers.

Now, we don’t see this model when we use AR. What we see is a video of, well, reality. Here’s where we get into the graphics. The reason it’s necessary to make a model of the room is so that when graphics are added over top of the footage, they can be interactive. They can be positioned within a room and made to follow the laws of physics and perspective as they relate to the real world. For example, if you place an object on a table, ideally it should appear to sit on the table (not fall through it or float away) and reflect light. If you place an object in one room and walk into another, the object should get smaller as you walk away, then disappear behind the wall when you turn the corner. We take a lot of this for granted, but it’s the little things that create the illusion.
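Two of the effects described above come down to short formulas. Shrinking with distance follows the pinhole-camera model, in which an object’s on-screen size is proportional to 1 / distance. Disappearing behind a wall is a depth test: for each pixel, if the phone’s model of the room says a real surface is closer than the virtual object, the virtual pixel is hidden. Here is a minimal sketch of both, assuming a hypothetical focal length of 1500 pixels and made-up distances.

```python
def projected_height_px(real_height_m, distance_m, focal_px=1500.0):
    """Pinhole-camera projection: on-screen size is proportional to 1 / distance."""
    return focal_px * real_height_m / distance_m

def is_hidden(virtual_depth_m, real_depth_m):
    """Depth test for one pixel: hide the virtual object if a real surface
    (taken from the phone's model of the room) is closer to the camera."""
    return real_depth_m < virtual_depth_m

# A hypothetical 0.3 m virtual box, viewed as you walk away from it.
for distance in (1.0, 2.0, 4.0):
    print(distance, "m ->", projected_height_px(0.3, distance), "px tall")

# You turn a corner: the wall is 1.5 m away, the box is now 3 m away.
print("box hidden:", is_hidden(virtual_depth_m=3.0, real_depth_m=1.5))
```

Each doubling of distance halves the box’s on-screen height, and once the wall is closer than the box, the box is no longer drawn.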

Graphics

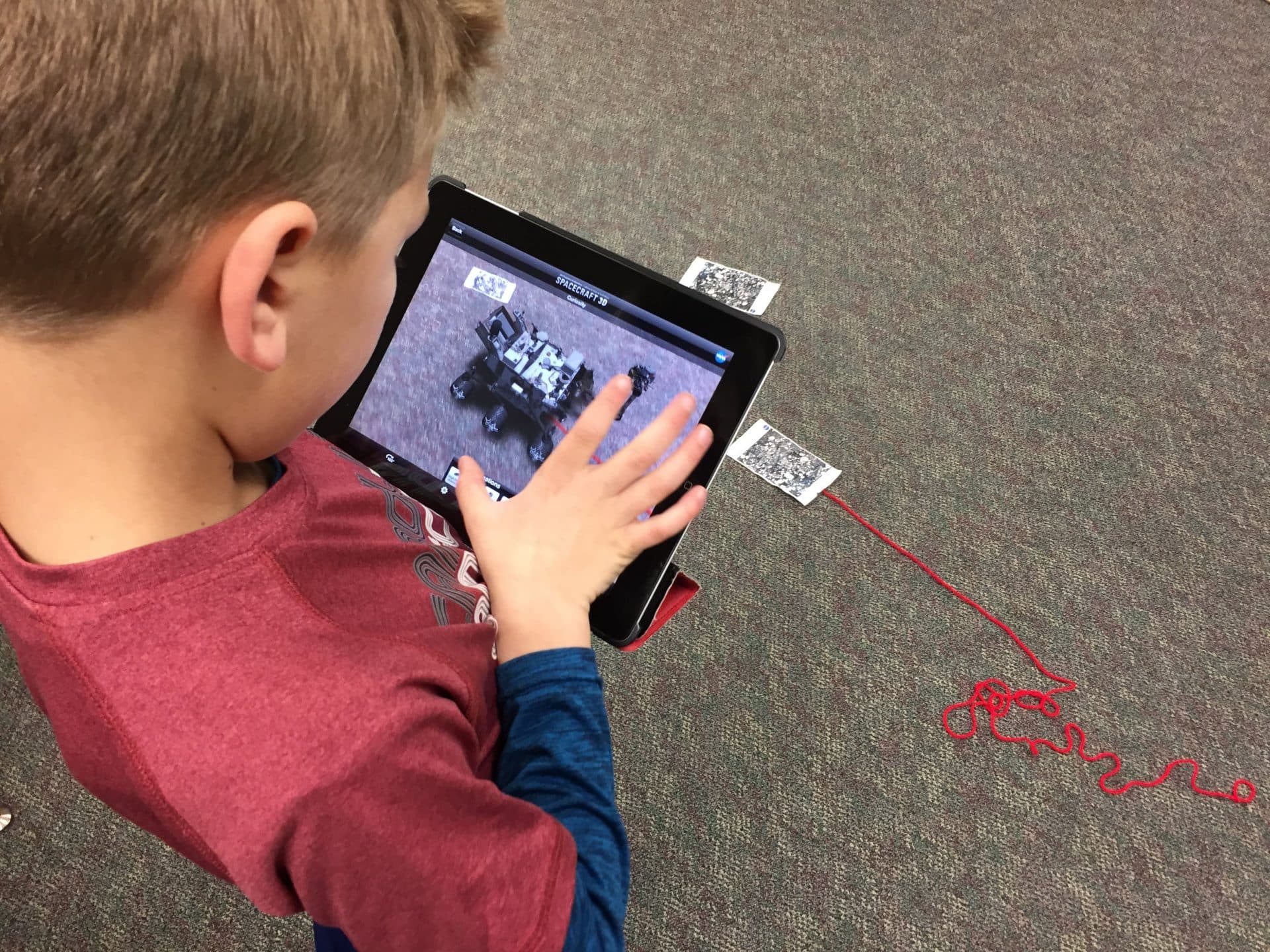

“Spacecraft 3D app Barrett NASA Explorer School” by Barrett.Discovery is licensed under CC BY 2.0

Above: A marker-based AR app showing a model spacecraft. NASA has a number of apps you can check out to learn more about space exploration, including Spacecraft AR, in which you can view models of craft like the Curiosity rover.

Where does your phone get these graphics from in the first place? You’ve probably already heard about computer-generated imagery, or ‘CGI.’ It’s been around for decades, but the technology to create it wasn’t very portable in the beginning. Nowadays, our mobile devices are perfectly capable of working with 3D models. The application of physics to these models is nothing new either; it just takes a bit of math. Think of your favorite 3D-animated video game. It’s bound to use physics! Where would Mario Kart be without gravity and momentum?
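To show what “a bit of math” can look like, here is a sketch of gravity acting on a virtual object that is dropped above a detected table. Each frame, gravity changes the object’s velocity and the velocity changes its height (a simple method called Euler integration). If the object would pass through the table’s surface, it is placed back on top and stopped. The table height, drop height, and frame rate are hypothetical values, and there is no bouncing or friction; game engines use far more complete physics.

```python
GRAVITY = -9.81  # m/s^2, pulling objects down the vertical (y) axis

def step(y, vy, dt, table_height):
    """Advance a falling object by one frame using simple Euler integration."""
    vy += GRAVITY * dt
    y += vy * dt
    if y <= table_height:          # collided with the detected table plane
        y, vy = table_height, 0.0  # rest on the surface instead of falling through
    return y, vy

y, vy = 1.5, 0.0  # dropped from 1.5 m; hypothetical table top at 0.75 m
for _ in range(120):  # two seconds at 60 frames per second
    y, vy = step(y, vy, 1 / 60, table_height=0.75)
print(y, vy)  # 0.75 0.0
```

Without the collision check in `step`, the object would keep falling straight through the table, which is exactly the kind of glitch that breaks the illusion.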

When you install an AR app on your phone, you’re likely downloading some 3D objects as part of the package. Other times, an app might download resources as needed over Wi-Fi or mobile data.

Tracking

Really, the tricky part of creating AR isn’t accessing 3D objects, and it isn’t applying physics. What’s difficult is tracking the locations of surfaces and objects as they move. After all, reality happens in real time. Your phone accomplishes this using what are called anchors and trackables. If you want a virtual box to sit on the floor, your phone will create an anchor there to mark the position and orientation of the box. As you move the camera around, your phone keeps track of the changing positions of ‘trackables’ such as planes and feature points. If it knows the position of an anchor relative to a trackable, it can update a virtual object’s position as the trackable moves.
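The anchor idea can be sketched in a few lines: store the box’s position as an offset relative to a trackable (here, the floor plane), and recompute its world position whenever the phone updates its estimate of where that trackable is. This is a simplified top-down (2D) illustration; the `Pose` and `Anchor` classes are hypothetical and are not the API of any particular AR toolkit.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    """Position (x, z) on the floor plus rotation (yaw, radians), top-down view."""
    x: float
    z: float
    yaw: float

    def transform(self, local_x, local_z):
        """Convert a point from this pose's local frame into world coordinates."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (self.x + c * local_x - s * local_z,
                self.z + s * local_x + c * local_z)

@dataclass
class Anchor:
    """A fixed offset relative to a trackable (e.g. a detected plane)."""
    trackable: Pose
    offset_x: float
    offset_z: float

    def world_position(self):
        """Where the anchored virtual object should be drawn right now."""
        return self.trackable.transform(self.offset_x, self.offset_z)

floor = Pose(x=0.0, z=0.0, yaw=0.0)
box_anchor = Anchor(floor, offset_x=1.0, offset_z=0.5)
print(box_anchor.world_position())  # (1.0, 0.5)

# The tracker refines its estimate of the floor plane; the box follows.
floor.x, floor.yaw = 0.2, math.pi / 2
print(box_anchor.world_position())
```

Because the anchor only stores an offset, the box stays put relative to the floor no matter how the floor’s estimated position and orientation change.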

Another option is marker-based tracking, in which graphics are anchored to a particular real-world image or object; Merge Cube is one example. Snapchat’s face filters work on a similar principle, using your face as the target to track.

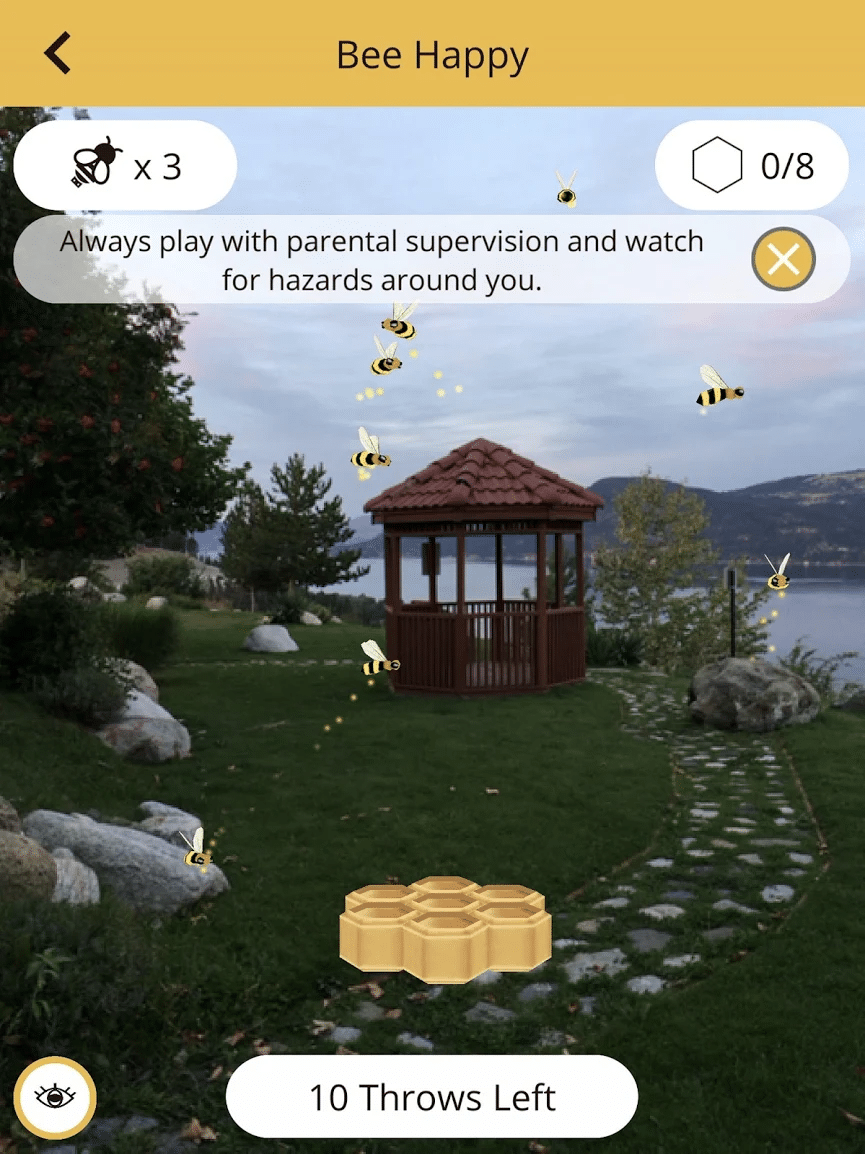

The AR Challenges in Agents of Discovery are an example of marker-less tracking: models interact with the ground and other planes around you. (So make sure you have enough floor space before you start!) The way you can interact with AR games depends on which app you’re using.

Putting it all together

Of course, none of this would work at all if these concepts couldn’t be melded together and run smoothly on your phone. The film industry has been using some of these techniques for ages, but what’s cool about AR is that your device does everything in real time. It’s continuously reevaluating the environment and updating what you see on the screen. That’s no small feat of processing power! Sometimes we take for granted just how smart our smartphones really are.
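A bit of arithmetic shows why real-time AR is demanding. At 60 frames per second, the phone has 1000 / 60 ≈ 16.7 milliseconds per frame to read its sensors, update tracking, run physics, and draw the result; at 30 frames per second it has about 33.3 ms. The stage timings below are invented for illustration and do not describe any real device.

```python
def frame_budget_ms(fps):
    """Time available for all the work on one frame, in milliseconds."""
    return 1000.0 / fps

# Hypothetical per-frame costs, in milliseconds, for one imagined device.
stages = {"read sensors": 1.0, "track features": 6.0,
          "update physics": 1.5, "render and composite": 9.0}
total = sum(stages.values())

for fps in (30, 60):
    budget = frame_budget_ms(fps)
    print(f"{fps} fps: budget {budget:.1f} ms, used {total:.1f} ms, "
          f"fits: {total <= budget}")
```

With these made-up numbers, the work fits comfortably at 30 frames per second but overruns the 60-frame-per-second budget, which is why AR apps lean so heavily on efficient algorithms and dedicated hardware.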

At present, AR has all sorts of uses, but we’re still just getting started. Sportscasters and newscasters are using AR in their broadcasts, stores are using it to create new customer experiences, construction companies and manufacturers are using AR and MR (mixed reality) to convey instructions and designs, and Google Maps is even changing the way we navigate with ‘Live View.’ And of course, we can’t forget about the gaming industry – it’s working non-stop to bring us bigger and better AR experiences, just for the sake of entertainment.

In the field of education, AR technology is booming, and it’s already changing the way we teach and learn. We know that linking activity and learning helps students succeed academically, so it’s only logical that companies (such as Agents of Discovery) have begun to make AR apps that function as learning tools. But this is all just the tip of the iceberg! Keep your eyes peeled for new developments in AR, because the best is yet to come.

If you’re looking to see AR in action, why not download the (free) Agents of Discovery app on your smartphone? You can look for Missions to play at a Park near you, or try an at-home Mission!